The next test is two tags combined. This is where the alternative approaches really pull ahead of the traditional tagging approaches.

For example, here's the text tag query:

select doc_id

from doc_tags_text dt1

join doc_tags_text dt2

using (doc_id)

where dt1.tag = 'math'

and dt2.tag = 'physics'

order by doc_id limit 25;

The query for tag IDs is even worse:

select di1.doc_id

from doc_tags_id di1

join doc_tags_id di2

on di1.doc_id = di2.doc_id

join tags tags1

on di1.tag_id = tags1.tag_id

join tags tags2

on di2.tag_id = tags2.tag_id

where tags1.tag = 'thrift shop'

and tags2.tag = 'blogging'

order by di1.doc_id limit 25;

Imagine how either of these would look for three tags, or four. Now compare that with the JSONB and array queries:

select doc_id

from doc_tags_array

where tags @> array['math','physics']

order by doc_id limit 25;

with find_docs as (

select doc_id

from doc_tags_json

where tags @> '[ "thrift shop", "blogging" ]'

)

select * from find_docs

order by doc_id limit 25;

(the dodge with the WITH clause is to force use of the JSONB index, per Part 2)

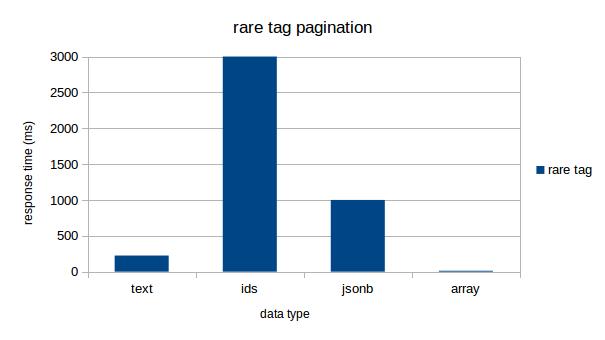

Big difference, eh? It can probably be taken as a given that if you need to do searches which involve combining two or more tags, you really want to use a GIN indexed approach just for code maintainability. Just in case, though, let's look at performance, both for combining two common tags ("math" and "physics", for 957 hits), and two rare tags ("thrift shop" and "blogging", which only have 5 hits). The differences in performance in the approaches were so extreme, I have to use a logarithmic scale for this graph, and am providing the raw numbers:

That's a huge difference. The "JSONB fixed" numbers are for a query where I force the planner to use the JSONB index instead of letting it choose its own path (using the doc_id index). As you can see, two-tag search via GIN index is an order of magnitude faster for common tags, and three orders of magnitude faster for rare tags. And the ID approach completely bombs for two-tag search.

For our final test, we'll build a tag cloud from scratch. This involves pulling counts of all distinct tags and then taking the top 100. In a real production environment, you wouldn't do things this way; you'd maintain counts by trigger, or use HyperLogLog, or something similar. But it makes a good test of mass index access and/or table scans for the various approaches.

Here the JSONB and array queries get annoying. Examples:

select count(*) as tag_count, tag

from doc_tags_json

join tags on doc_tags_json.tags @> to_json(tags.tag)::jsonb

group by tag

order by tag_count desc limit 100;

select count(*) as tag_count, tag

from doc_tags_array

join tags on doc_tags_array.tags @> array[tags.tag::text]

group by tag

order by tag_count desc limit 100;

As I mentioned at the beginning of this series, arrays don't automatically prevent duplicate entries. If I don't care that much about accuracy (and generally for tag clouds one doesn't) I can use a faster query with UNNEST:

select count(*) as tag_count, ut.tag

from doc_tags_array,

lateral unnest(doc_tags_array.tags) as ut(tag)

group by ut.tag

order by tag_count desc limit 100;

(the lateral unnest is a 9.4 feature, and darned useful). Let's look at execution times:

So, here you can see that the array and JSONB approaches lose; they simply can't be as fast as a plain text column for building a count. This does mean that if your application spends a lot of its time dynamically building tag clouds, arrays might not be the way to go and JSONB certainly isn't.

Conclusion: the overall winner is an array of text, with a GIN index. This is better for one-tag searches, worlds faster for two-tag searches, and competitive at other tasks. It's also the smallest representation, and becomes smaller and faster still if you actually put the array of tags in the documents table. Still, there are times that you would want to use the traditional child table with plain text tags: if you build tag clouds a lot or if you never search for two tags and your ORM can't deal with Postgres arrays.

Sadly, I have to conclude that my personal favorite, JSONB, isn't quite ready for this use. See discussions on the pgsql-performance list regarding how to estimate selectivity for JSONB contains.

I realize that I did not test comparative write speeds. Maybe next time; I've already torn down the AWS instance.