You can check out the original survey here, although we're no longer paying attention to responses.

Demographics

First, let's find out who our surveyees are. 108 people responded. Given that VM and I broadcast the survey over Twitter, as did Josh Simmons and Gareth Greenaway, I expect our sample to be fairly skewed; let's see. We started by asking people whether they only attend talks, or whether they both give and attend them.

As you can see, our sample skews heavily towards presenters, with a minority of pure audience members. A few wiseacres said they only give talks, and don't attend them, which wasn't supported by their other answers. We also asked how many conferences and talks folks went to in the last year:

So most of our respondents are frequent conference and/or talk attendees. This colors the rest of the survey: what we're looking at is how a group of experienced people, who go to a lot of talks and give more than a few, rate other speakers' performance. I suspect that a survey of folks at their very first tech conference would produce somewhat different data.

The Good

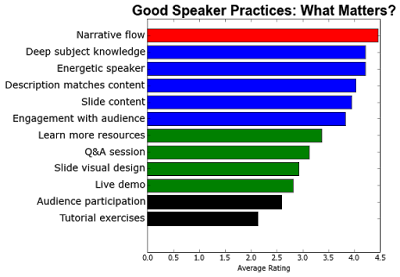

We asked "Thinking of the presentations you attended with topics and/or speakers you've most enjoyed, what speaker behaviors below do you feel substantially added to the experience?". The idea of this question was to find out what skills or actions by the speaker were the most important for making a talk valuable. Each item was rated on a 1-5 scale.

The above graph requires some explanation. The length of each bar is the item's average score, and the bars are ranked from the highest- to the lowest-rated practice. The color of each bar indicates the median score, which helps in cases where the average is deceptively skewed: red is 5, blue is 4, green is 3, and black is 2.
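For readers who want to build this kind of chart themselves, here is a minimal Python sketch of the summary behind it: the average and median for each practice, sorted by average, with the median mapped to the legend colors. It is not the code from our notebook. The function name `summarize_ratings`, the input format (a dict from practice name to a list of 1-5 scores), the `gray` fallback for medians outside the legend, and the use of `statistics.median_low` so that even-sized lists still yield a whole-number rating are all assumptions for illustration, and the toy data is made up.

```python
from statistics import mean, median_low

# Median score -> bar color, following the legend described above.
MEDIAN_COLORS = {5: "red", 4: "blue", 3: "green", 2: "black"}


def summarize_ratings(ratings):
    """Return (item, average, median, color) tuples, highest average first.

    `ratings` maps each practice name to a list of 1-5 scores (assumed format).
    median_low always returns one of the actual scores, so the color lookup
    never sees a value like 3.5 (an assumption; ties could be handled otherwise).
    """
    rows = []
    for item, scores in ratings.items():
        avg = mean(scores)
        med = median_low(scores)
        color = MEDIAN_COLORS.get(med, "gray")  # gray: median not in the legend
        rows.append((item, avg, med, color))
    # Rank bars from the highest to the lowest average, as in the graph.
    rows.sort(key=lambda row: row[1], reverse=True)
    return rows


# Hypothetical toy data, NOT the actual survey responses.
toy = {
    "narrative structure": [5, 5, 4, 5],
    "slide visual design": [3, 2, 4, 3],
    "live demos": [2, 3, 1, 2],
}

for item, avg, med, color in summarize_ratings(toy):
    print(f"{item:22s} avg={avg:.2f} median={med} color={color}")
```

Passing these rows to any horizontal bar chart, with bar length taken from the average and color from the fourth field, reproduces the layout described above.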

We can see a few things from this. First, no practice was considered completely unimportant; nothing averaged below 2.1. Good narrative structure was clearly the best-liked practice, with deep subject knowledge and being an energetic speaker ranked second and third.

If you look at the overall rankings, you can see that "content" items are the best loved, whereas talk "extras" and technical improvements are the least important. Slide visual design, which many speakers spend a lot of time sweating over, averages just 3.0, and the three items involving technical interaction with the audience (demos, participation, and exercises) come in last. I do wonder whether those three are last because they are so seldom done well, even by experienced speakers, or because they really are unimportant. Let me know what you think in the comments.

The Bad

To balance this out, we asked surveyees, "Thinking of the presentations you attended with topics and/or speakers you liked, what speaker behaviors below do you feel substantially detracted from, or even ruined, an otherwise good presentation?" The idea was not to find out why sucky presentations sucked, but what things prevented an acceptable talk from being good. Here are the ratings, graphed the same way as the "good" ratings:

The top two speaking problems were not being able to hear or understand the speaker, and the presenter being bored and/or distracted. The first shows how important diction, projection, and AV systems are to having a good talk, especially if you're speaking in a language that isn't your primary one. The second is consistent with the good speaker ratings: people love energetic speakers, and if speakers are listless, so is the audience. So make sure to shoot that triple espresso before you go on stage.

There were a few surprises in here for me. Unreadable code on slides, one of my personal pet peeves, landed in the middle of the rankings. Running over or under time, which I expected to be a major talk-ruiner, wasn't rated as one. Offensive content, on the other hand, is a much more widespread problem than I would have assumed.

"Ums" and body language problems, however, ranked at the bottom. From my own observation, I know these are common issues, but apparently they don't bother people that much. Or do they?

The Ugly

Finally, we asked, "If you could magically eliminate just one bad speaker behavior from all presenters everywhere, which one would it be?" The idea was to find out which single behavior was so bad that it really drove audiences bananas. Here are the responses:

So, first, there are a few things which are inconsistent with the ratings. Unpracticed presentations were the #1 issue, and some other items which looked unimportant by rating, like saying "um" a lot, show up as a top pet peeve. So apparently people hate "um" and interruptions a lot, but they don't see them ruining that many otherwise good talks. The other top issues match the ratings we already saw.

About a seventh of the responses went to answers that received only one or two votes, including an assortment of write-ins. Here are a few of the more interesting ones:
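As a rough sketch of how a "rare answer" share like that can be tallied, the hypothetical Python helper below counts single-choice votes and separates out answers at or below a vote threshold. The name `split_rare`, the `threshold=2` default, and the reading of "responses" as individual votes are assumptions, and the sample votes are invented for illustration.

```python
from collections import Counter


def split_rare(votes, threshold=2):
    """Split single-choice votes into frequent and rare answers.

    Answers with `threshold` or fewer votes count as rare. Returns
    (frequent Counter, rare Counter, share of all votes that went to rare answers).
    """
    counts = Counter(votes)
    rare = Counter({answer: n for answer, n in counts.items() if n <= threshold})
    # Counter subtraction drops entries whose count falls to zero,
    # so this leaves only the answers above the threshold.
    frequent = counts - rare
    rare_share = sum(rare.values()) / len(votes) if votes else 0.0
    return frequent, rare, rare_share


# Invented sample votes, NOT the actual survey responses.
votes = ["unpracticed"] * 5 + ["um"] * 3 + ["reading slides"] * 2 + ["long intro"]
frequent, rare, share = split_rare(votes)
print(dict(frequent), dict(rare), f"{share:.0%}")
```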

- "Reading slide content to audience" (two votes, including a page-long tirade)

- "Giving the talk using slides that are really optimized to be downloaded by someone who isn't able to attend the talk. Presentation slides and downloadable slides are different beasts."

- "Long personal company or employment history introductions"

On to the Tutorial

So this survey gives us enough information to know which things we can cut back on to fit our time slot ... and even a few things to spend more time on! If you want to learn more about how to be a great speaker, join us at SCALE14 on the morning of Thursday, January 21.

If you just like the graphs, both the graphs and the IPython notebook used to produce them are in our GitHub repo.